There’s obvious hype about using AI in content work: it streamlines content workflows, speeds up content development, and scales delivery. But often, we aren’t as keen to acknowledge the risks. There’s much that can go wrong, from content that violates compliance rules to personal or proprietary information being exposed to public AI models.

Content teams are often among the first to put AI to work in an organization. We’re already using GenAI to generate summaries, drafts, snippets, and structures. We’re producing prompt templates and training models. But despite being early adopters of AI in our own work, we are often among the last to be included in enterprise-level governance conversations.

Drafting an AI usage policy for content may seem straightforward until you realize the numerous stakeholders, unknowns, and edge cases you’re dealing with. If you’re part of a content or content-adjacent team in an organization (regardless of size), a risk management framework can be a valuable foundation.

Risk management defines how you think about and manage risks, while a policy formalizes what you tell people to do (or not do) as a result of that thinking. By starting with evaluating and managing risk, you’ll find an AI content policy is easier to advance in your organization (and a lot more rigorous).

Choose the right risk management framework

As generative AI becomes embedded in organizations, content leaders are under pressure to codify its use. But with technology that’s new and rapidly changing, there are few precedents. Risk management can help to lay a solid foundation.

According to IBM, risk management is the process of identifying, assessing, and addressing any financial, legal, strategic, and security risks to an organization. The ISO 31000 standard offers a well-established methodology for managing risk, but it’s not the only option. Alternatives include the NIST Risk Management Framework and the COSO Enterprise Risk Management Framework. The key is to choose an approach that matches your organizational culture and resources.

In my consulting work with organizations of various sizes, I’ve found that a risk management framework benefits both the content team and the wider organization. For example, treating an AI content policy as an instrument of enterprise risk management lets us align it strategically with the organization’s broader framework. This approach gives content teams a voice in governance discussions by framing concerns in a way that leadership, IT, legal, and other stakeholders understand.


The risk management process: 6 standard steps

No matter which risk management framework you choose, the following six steps are likely to apply.

1. Establish the context

As content experts, we all know the importance of context. Here, we define the operational and strategic context for GenAI in your content workflows. The purpose of this step is to ensure that the content policy isn’t developed in isolation from enterprise-wide risk management.

You can go through these steps in any order and add any context specific to your industry or organization:

- Set out your organization’s strategic objectives and risk appetite. Are you in a highly regulated industry with low risk tolerance or a tech-forward company that values innovation over perfection?

- Map the regulatory landscape, such as AI laws or industry regulations and norms (e.g., GDPR, CCPA, or the EU AI Act)

- Formalize collaboration channels with Legal, Compliance, InfoSec, and Brand Governance teams

- Clarify the scope of GenAI use across:

  - Content responsibilities (e.g., Product, Marketing, Legal, HR, Customer Service)

  - Content types (e.g., in-app microcopy, thought leadership)

  - User roles (e.g., editors, strategists)

- Connect GenAI use to existing content governance by asking how GenAI fits within your style guides, brand standards, and approval workflows

I’ve found it useful to develop a shared glossary at this point. “AI-assisted,” “AI-generated,” “human in the loop,” and “responsible AI” often mean different things to different groups.

For smaller teams: Focus on mapping your current content processes and identifying where GenAI might intersect with sensitive areas like customer data or compliance requirements.

2. Identify the risks

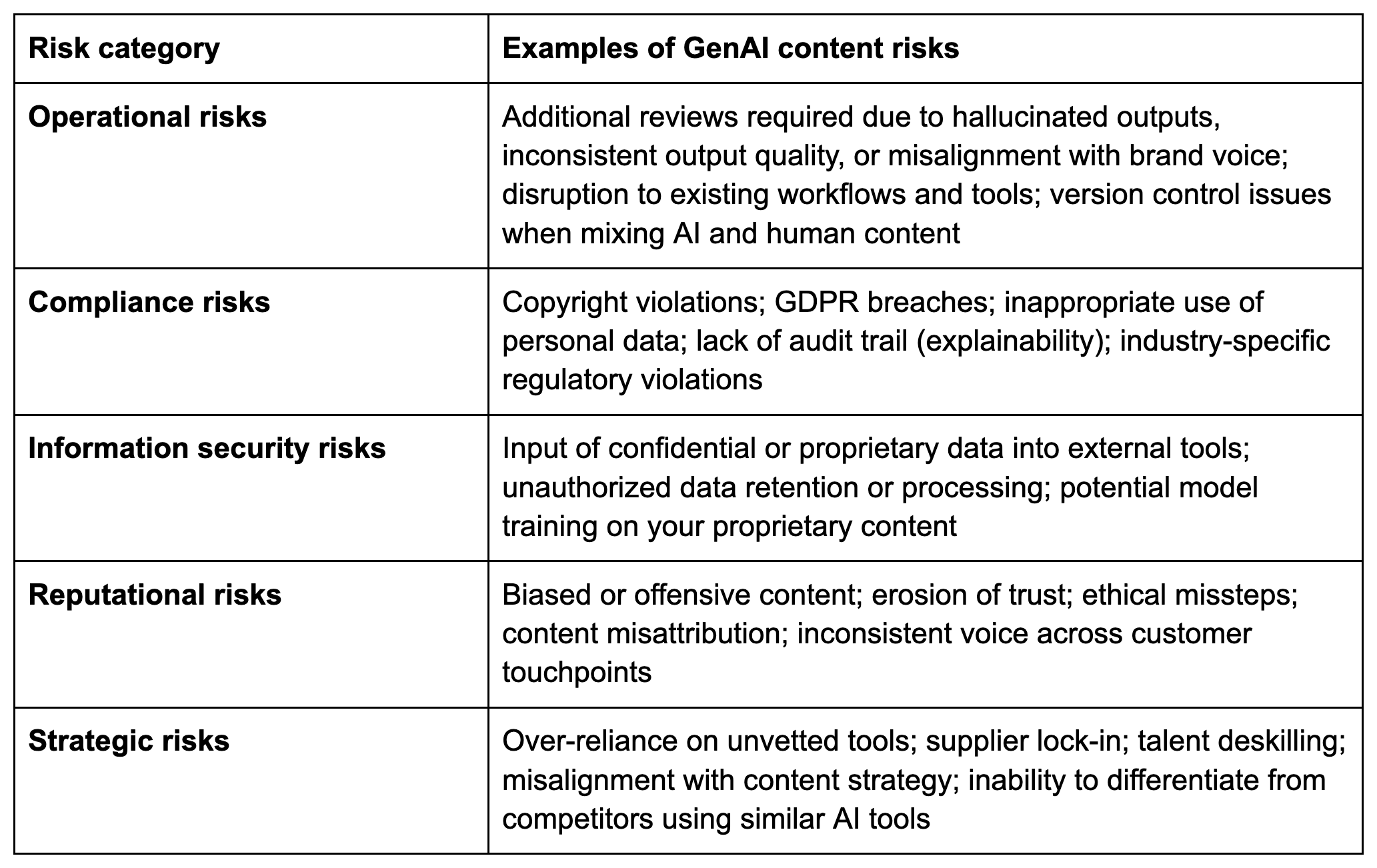

Here, consult as broadly as possible to identify a range of risks. You can use these categories as a starting point:

When going through this process, keep in mind the implications of the model you use and how AI is deployed. For example, your choice of an open vs. closed model, an API vs. web interface, or a private RAG pipeline vs. a public knowledge base will have a substantial impact on the level of risk and control you have.

Involve legal, compliance, security, and business stakeholders in workshops or interviews. Document each risk in a GenAI-specific risk register, including the risk owner and impacted systems or teams.
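If your team tracks the register in a spreadsheet or a small script, its shape might look something like the sketch below. This is only an illustration: the field names, the category labels, and the two sample risks are my own assumptions, not part of any standard, so adapt them to whatever your organization already records.

```python
from dataclasses import dataclass, field


@dataclass
class RiskEntry:
    """One row in a GenAI-specific risk register (field names are illustrative)."""
    risk_id: str
    description: str
    category: str   # hypothetical label, e.g. "privacy" or "compliance"
    owner: str      # the person or team accountable for the risk
    impacted: list[str] = field(default_factory=list)  # systems or teams affected


# Sample entries, invented purely for illustration.
register = [
    RiskEntry(
        risk_id="R1",
        description="Customer data pasted into a public AI tool",
        category="privacy",
        owner="InfoSec",
        impacted=["Customer Service", "CRM"],
    ),
    RiskEntry(
        risk_id="R2",
        description="AI-drafted copy makes a non-compliant product claim",
        category="compliance",
        owner="Legal",
        impacted=["Marketing"],
    ),
]


def risks_owned_by(entries: list[RiskEntry], owner: str) -> list[RiskEntry]:
    """Return every register entry assigned to the given owner."""
    return [entry for entry in entries if entry.owner == owner]


for entry in risks_owned_by(register, "Legal"):
    print(entry.risk_id, entry.description, entry.impacted)
```

Keeping the owner and impacted teams as explicit fields makes it easy to hand each stakeholder only the risks they are accountable for.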

For content teams without risk expertise: Use simple brainstorming sessions with prompts like, “What could go wrong if we use AI for our customer email responses?” to capture concerns.

3. Analyze the risks

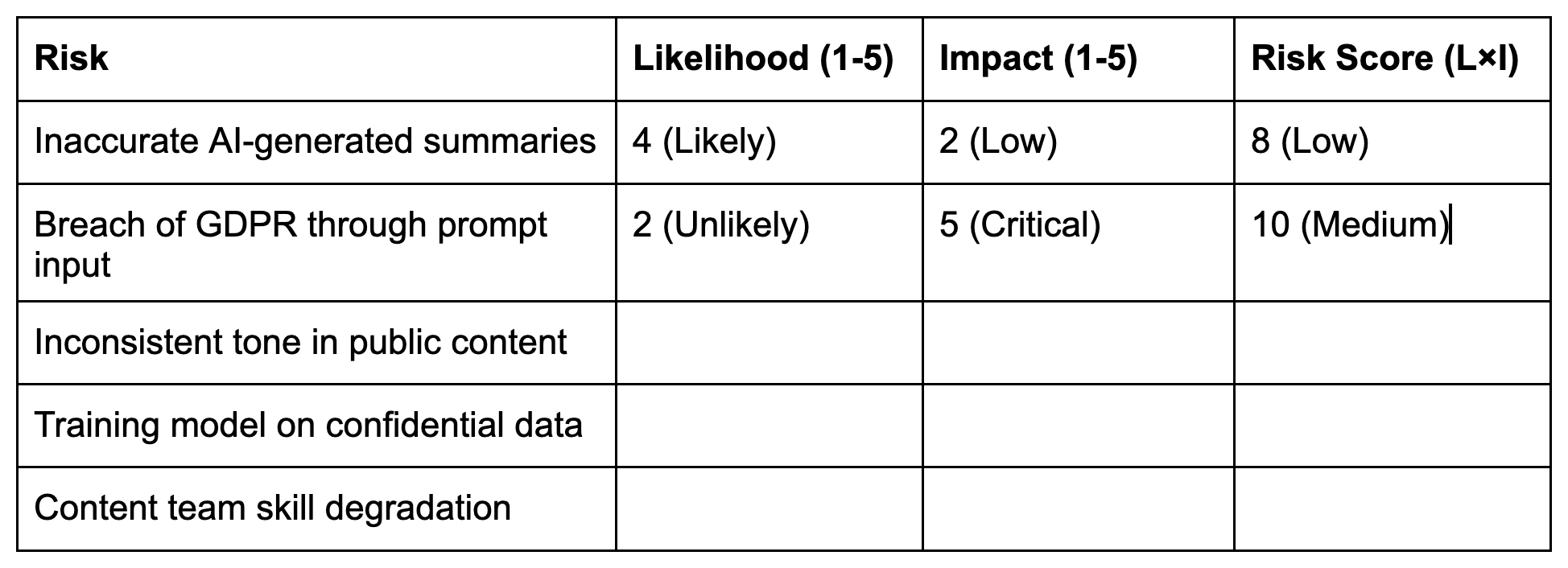

Once risks are identified, rate each risk on two scales:

- Likelihood: How probable is this risk based on current behavior and controls?

- Impact: What happens if it does occur?

This is a very useful exercise, as it enables you and your team to understand the risks in more depth. Use a risk matrix to score and prioritize. The first two risks are assessed as examples below. How would you assess the others in this list?

The risk score is calculated by multiplying likelihood by impact. With each rated from 1 to 5, scores range from 1 to 25. Generally:

- Scores 1-8: Low risk (may require monitoring)

- Scores 9-15: Medium risk (requires attention and controls)

- Scores 16-25: High risk (requires immediate action and strong controls)
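The scoring rule above is simple enough to sketch in a few lines. The version below assumes that likelihood and impact are each rated from 1 to 5, which is what a 1-25 score range implies. The three risks and their ratings are hypothetical, invented only to show one result in each band.

```python
def risk_score(likelihood: int, impact: int) -> int:
    """Multiply likelihood by impact. Both are assumed to be rated 1 to 5."""
    for name, value in (("likelihood", likelihood), ("impact", impact)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")
    return likelihood * impact


def risk_band(score: int) -> str:
    """Map a score to the Low / Medium / High bands listed above."""
    if score >= 16:
        return "High"
    if score >= 9:
        return "Medium"
    return "Low"


# Hypothetical risks and ratings, invented for illustration: (likelihood, impact)
ratings = {
    "Confidential data entered into a public AI tool": (4, 5),
    "AI draft contains a factual error": (3, 4),
    "AI draft drifts from brand voice": (4, 2),
}

# Print the risks from highest to lowest score.
for name, (likelihood, impact) in sorted(
    ratings.items(), key=lambda item: risk_score(*item[1]), reverse=True
):
    score = risk_score(likelihood, impact)
    print(f"{score:>2}  {risk_band(score):<6}  {name}")
```

Note that a rare but severe risk (1 x 5) and a frequent but minor one (5 x 1) both score 5, so treat the number as a prompt for discussion rather than the final word.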

This stage informs which controls are worth investing in. For example, a rare but critical risk (e.g., legal liability) may warrant more mitigation than a frequent but low-impact issue.

For smaller teams: Identify your top three risks rather than creating a full list.

4. Evaluate the risks

This is your decision point. Your evaluation must align with your context and especially your organization’s overall risk appetite. For each risk, ask:

- Do we accept it? Some risks are tolerable, and monitoring may be enough.

- Do we need more controls? Extra processes or tools may be needed to lessen the likelihood or impact.

- Should we stop doing this activity? Some uses may be so risky that you need to avoid them completely.

Document your decisions: Create a clear record of which risks you’ve decided to accept, mitigate, or avoid, and the reasons for each decision.

5. Treat the risks

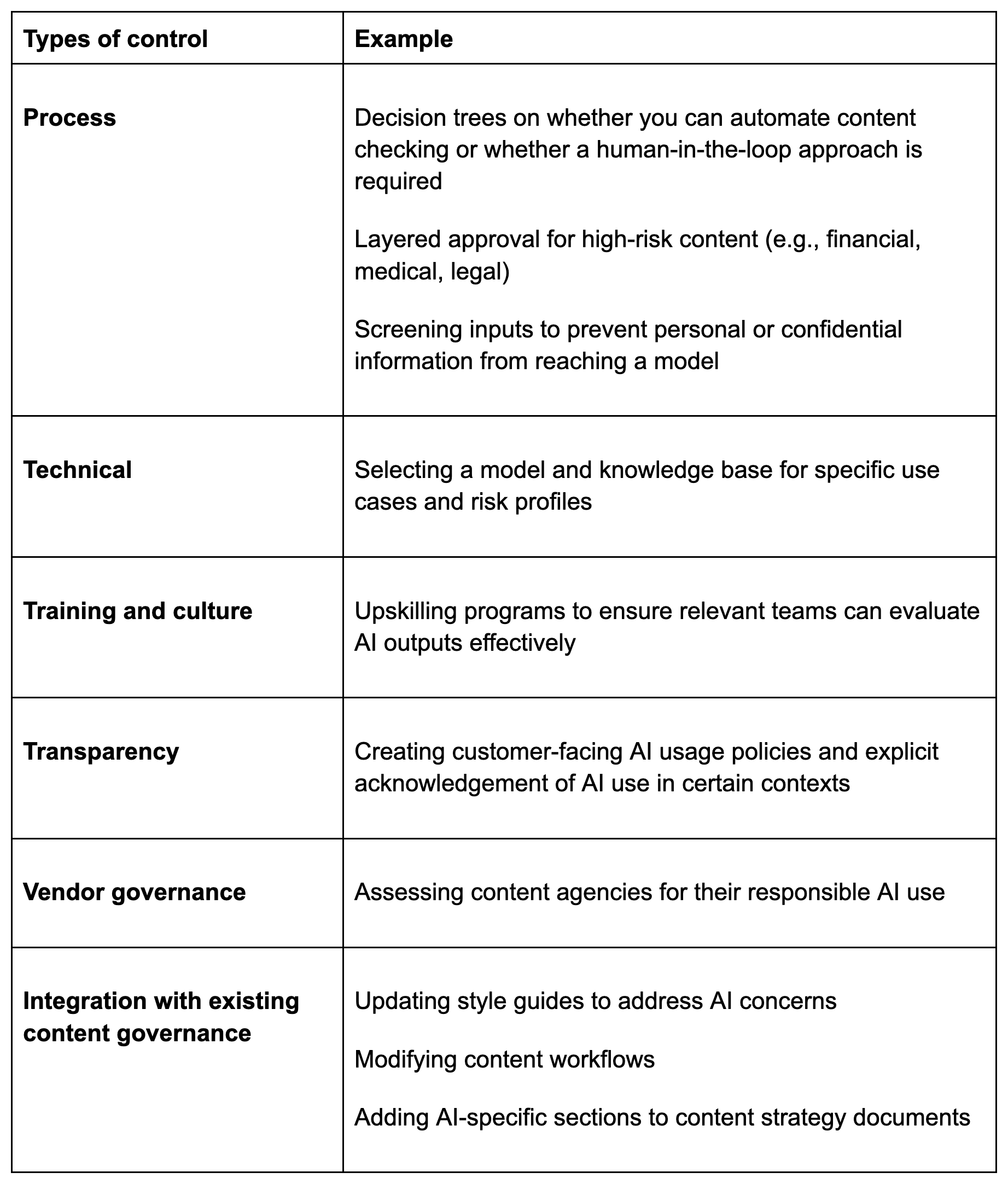

Now, define and implement the controls for each risk. Here are some examples:

These controls must be proportionate and actionable. This is a core piece of content that will become a crucial component of your content policy.

For content teams with limited resources: Begin with the highest-risk items and implement simple controls first, such as human review requirements or clear usage guidelines. Build more sophisticated controls as your AI usage matures.

6. Monitor and review

Now, set your metrics so that you know what success looks like. You can use metrics such as:

- Content quality (heuristic) scores before and after AI implementation

- Error rates in AI-generated content by category

- Review time required for AI content vs. human-generated content

- Percentage of content requiring substantial rework

- Content team satisfaction and confidence levels
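A couple of these metrics can be calculated from a simple review log. Here is a minimal sketch, assuming you keep one record per AI-assisted content item. The record fields (`category`, `has_error`, `substantial_rework`) and the sample data are hypothetical and stand in for whatever your review process actually captures.

```python
from collections import Counter

# Hypothetical review log: one record per AI-assisted content item.
review_log = [
    {"category": "email", "has_error": True, "substantial_rework": True},
    {"category": "email", "has_error": False, "substantial_rework": False},
    {"category": "microcopy", "has_error": False, "substantial_rework": False},
    {"category": "microcopy", "has_error": False, "substantial_rework": False},
]


def error_rate_by_category(log: list[dict]) -> dict[str, float]:
    """Share of items with at least one error, per content category."""
    totals = Counter(record["category"] for record in log)
    errors = Counter(record["category"] for record in log if record["has_error"])
    return {category: errors[category] / totals[category] for category in totals}


def rework_percentage(log: list[dict]) -> float:
    """Percentage of items that needed substantial rework."""
    if not log:
        return 0.0
    reworked = sum(1 for record in log if record["substantial_rework"])
    return 100 * reworked / len(log)


print(error_rate_by_category(review_log))
print(rework_percentage(review_log))
```

Running the same calculation on a regular schedule gives you a trend line to share with stakeholders, rather than a one-off snapshot.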

Be sure to review your risk management framework regularly and keep your risk register updated as regulations, technology, or your organization change. Offer ways for your content team, and the broader organization, to report their concerns. The idea is to continuously improve the framework, so make sure your team understands how to do this and has the time to do it.

Lastly, make sure you have a strategy for keeping stakeholders updated on your risk management framework. For example, content teams should develop simple dashboards or reports that highlight key risk indicators and policy effectiveness.

Tips for your next step: Drafting your AI content policy

So far, we’ve focused on risk management. Once this exercise is in progress, you’ll feel ready to start your policy. Here are some considerations for your next step:

- Connect to value, not just risk. While this framework focuses on risk management, the most successful GenAI content policies also articulate how controlled AI use creates value through efficiency, creativity, and scale. Balance risk mitigation with enabling appropriate innovation.

- Advocate for content expertise. In policy discussions dominated by legal and IT perspectives, content teams need to assert their unique understanding of voice, tone, and audience expectations. Frame content concerns in terms of organizational risk and opportunity to ensure they’re taken seriously.

- Use real examples. Bring specific use cases into the room, like “using ChatGPT to summarize a financial report” or “generating social media copy in bulk.” Create a library of examples that illustrate both acceptable and unacceptable uses.

- Be explicit about roles. Who reviews what? Who’s accountable if it goes wrong? The answers must be clear in the policy, not implied.

- Don’t try to release all of it at once. There will be some parts of the policy that take longer than others. Prioritize high-risk areas and quick wins, then build from there.

- Prioritize what’s important to you. For smaller teams or organizations, focus on principles and simple controls rather than elaborate frameworks. Start with a one-page policy that covers your highest-risk activities, then expand as needed.

Risk management: The cornerstone of an effective AI content policy

If you use generative AI, risk management is a way to catalyze the development of a rigorous content policy. It will give you the substance of what controls are needed and make it easier for you to consider both risk and opportunity in a calm and logical way. It will make the basis of your content policy transparent internally and externally. And it will lay a foundation for successful alignment with compliance, leadership, and other stakeholders.

As with all things AI, it’s not the tools that will determine your success or failure. It’s the way teams come together to use those tools in a coordinated and strategic way. Identifying and controlling your risks and creating a strong content policy will be your first step in making AI work for you, your content team, and your organization.